Addendum added May 7, 2017

- The lack of documentation for the Linear simulation model resulted in its replacement with the ImageJ plugin.

- In the initial study, data collection was a manual process. The Colorblind Simulation Pro app now includes an activity that automatically executes and scores the Farnsworth D-15 Dichromatic Panel test.

- The automated cap order is sorted to match how humans sort colors, replacing the manual hue-based sort.

- The test scores now include the TCDS (Total Color Difference Score).

Once again, the tests show that visually evaluating an image does not prove that a model is accurate. The output of a simulation should produce results that correspond to human perception. What matters is not the color produced, but the accurate simulation of the confusion produced by the type of CVD.

CVD simulation models tested

The CVD simulation models tested are those present in version 1.1.4 build 36 of the Colorblind Simulation Pro app. All simulation models have the following characteristics:

- The original source code for all models is available on the Internet. They all have an open source license. I preserved the routines that perform the simulation calculations. The UX routines were changed to fit the Android framework.

- The authors of the original source code designed the simulation models to use the sRGB color space. The sRGB color space is the default color space for Web browsers and mobile devices. Since Android lacks color management, the tests were limited to the sRGB color space.

The models tested are as follows:

- Meyer-Greenberg-Wolfmaier-Wickline (MGWW)

The first computer simulation work was done in 1988 by Gary W. Meyer and Donald P. Greenberg. Thomas Wolfmaier wrote a Java applet around 1999 that used the Meyer and Greenberg study to create a CVD simulator. Two years later, Matthew Wickline published his improved version of Wolfmaier's code. The MGWW model converts sRGB colors to the CIE XYZ color space. MGWW does not transform CIE XYZ to CIE LMS.

- Brettel-Vienot-Mollon (BVM)

In 1997, Hans Brettel, Francoise Vienot and John D. Mollon published "Computerized simulation of color appearance for dichromats". BVM converts sRGB values directly to the CIE LMS color space, instead of following the two-step process of converting to CIE XYZ and then to CIE LMS. The authors of the study do not mention which Chromatic Appearance Model (CAM) was used to perform the transformation to the CIE LMS color space. The source code used in the Colorblind Simulation Pro app is based on the GIMP source code.

- Machado-Oliveira-Fernandes (MOF)

While MGWW and BVM focused on dichromats, Machado-Oliveira-Fernandes sought to create a simulation model for both dichromats and anomalous trichromats. Where MGWW and BVM follow the Young-Helmholtz theory of color vision, MOF follows the stages theory. The stages theory, or zone theory, holds that the Young-Helmholtz theory works at the photoreceptor level, but signals are then processed according to Hering's component theory. The only source code available is the pre-calculated tables provided in the above article.

- ImageJ CVD Plugin (ImageJ)

The ImageJ CVD plugin is a version of MGWW that uses pre-calculated tables. The author of the code does not provide any details as to the process for determining the pre-calculated values. This ImageJ plugin is similar to the Vischeck plugin.

Test environment

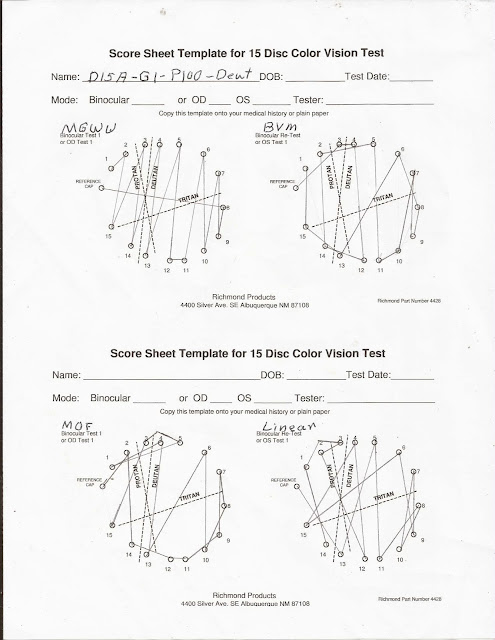

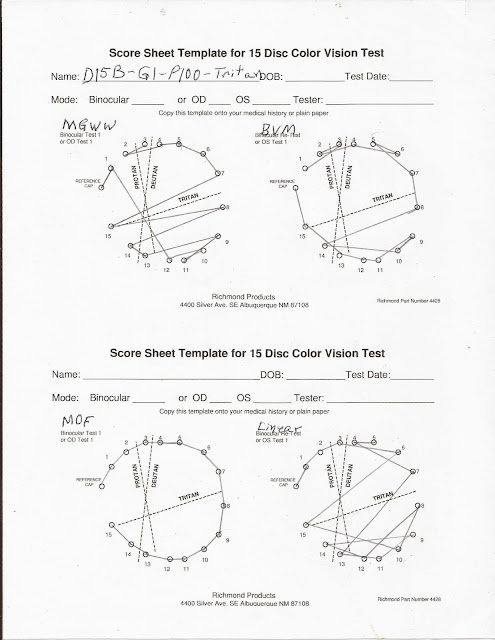

The automated version of the Farnsworth D-15 Dichromatic Panel test produces more accurate results and provides more information. The test activity uses both the Colblindor (D15A) and Color Blindness (D15B) sRGB values for the 16 caps (Pilot cap + 15 caps). The following images show the output of the D-15 activity for the Colblindor RGB colors. The test conditions are for deuteranopia with a gamma of 1.0 and 100% colorblindness. The first screenshot is for a person with normal vision. This screenshot is followed by images for the MGWW and BVM models.

The order of the caps was determined as follows:

Figure 1 - D15A Normal color vision

Figure 2 - D15A Deutan MGWW

Figure 3 - D15A Deutan BVM

- The sorting algorithm used is a modified solution to the Traveling Salesman Problem. The algorithm produces a minimum TCDS by using the CIE L*a*b* color distance.

- Although it is rare, the cap order for duplicate RGB values is in ascending order.
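The ordering rules above can be sketched in Java. This is only a minimal illustration, assuming a greedy nearest-neighbour pass that starts at the pilot cap; the app's actual modification of the Traveling Salesman approach is not described in this article, and a greedy pass does not guarantee the minimum TCDS. The class `CapOrderSketch`, its method names, and the CIE76 distance formula are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: order caps by repeatedly taking the nearest unused cap.
final class CapOrderSketch {

    // CIE76 color difference between two L*a*b* triples {L, a, b} (assumed formula).
    static double deltaE(double[] p, double[] q) {
        double dL = p[0] - q[0];
        double da = p[1] - q[1];
        double db = p[2] - q[2];
        return Math.sqrt(dL * dL + da * da + db * db);
    }

    // Returns cap indices in visiting order; index 0 is the pilot cap.
    // Ties (for example, duplicate colors) go to the lower cap index,
    // which keeps duplicates in ascending order.
    static List<Integer> order(double[][] lab) {
        List<Integer> result = new ArrayList<>();
        if (lab.length == 0) {
            return result;
        }
        boolean[] used = new boolean[lab.length];
        int current = 0;
        used[0] = true;
        result.add(0);
        for (int step = 1; step < lab.length; step++) {
            int best = -1;
            double bestDistance = Double.MAX_VALUE;
            for (int i = 0; i < lab.length; i++) {
                if (used[i]) {
                    continue;
                }
                double d = deltaE(lab[current], lab[i]);
                if (d < bestDistance) { // strict '<' keeps the lower index on ties
                    bestDistance = d;
                    best = i;
                }
            }
            used[best] = true;
            result.add(best);
            current = best;
        }
        return result;
    }

    // TCDS: the sum of color differences between consecutive caps in the order.
    static double tcds(double[][] lab, List<Integer> order) {
        double total = 0.0;
        for (int i = 1; i < order.size(); i++) {
            total += deltaE(lab[order.get(i - 1)], lab[order.get(i)]);
        }
        return total;
    }
}
```

Passing the simulated L*a*b* values to `order` and the resulting list to `tcds` gives one cap order and its score; a full Traveling Salesman search would be needed to confirm that the score is minimal.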

Simulation of the Farnsworth D-15 test is available in Colorblind Simulator Pro version 1.1.4 build 36, and newer. This is strictly a beta activity, and is available under the options menu.

Since Colblindor and Color Blindness use different RGB values for the caps, there are variations in the output.

Scoring tests

The scoring of the tests is impacted by the following:

- The Munsell colors selected by Farnsworth have different hue values, while holding the saturation and value to a constant value of 6. Neither set of RGB values holds the initial values for saturation and brightness constant. Consequently, the RGB version of the test introduces variables that were not part of the original test design.

- The RGB colors are constrained to the sRGB color space. Some of the Farnsworth D-15 Munsell color codes fall outside of the sRGB gamut of colors.

The traditional scoring sheets provide a visual means of showing the deviations from a normal color wheel. The cap orders in Figures 1 through 3 depict a normal and two deuteranopia color wheels. The article "Color Perception" by Michael Kalloniatis and Charles Luu provides samples of the human response to the Farnsworth D-15 Panel. Figure 4 shows the graphs that are relevant to this article.

The dashed lines for PROTAN, DEUTAN and TRITAN are used to score the test. The transitions that are parallel to a line count as an indication of that form of CVD. Minor transitions, such as a cap order of 2, 4, 3, 5, are considered normal.

Figure 4 - Sample human response to D-15 panel

The article "A Quantitative Scoring Technique for Panel Tests of Color Vision" by Algis J. Vingrys and P. Ewen King-Smith defines another method for scoring the Farnsworth hue tests. I converted the BASIC code in the article to the Java code used in the automated tests. I added the code required to generate the TCDS, using the same approach as Bowman's Munsell color distances. The following provides a brief guide for interpreting the output:

The following summarizes the test results for deuteranopia:

The following summarizes the test results for tritanopia:

I replaced the TCDS data in the Vingrys and King-Smith paper with values based on Bowman's color distance table. The data now matches the calculations from www.torok.info/colorvision/dir_for_use.htm.

Figure 21 shows just one row of the entire table. Providing a complete table that matches Bowman's table is a future task. Note that the greater the color distance, the easier it is to separate two colors.

- Angle

The confusion angle identifies the type of color deficiency. According to Vingrys and King-Smith, an angle between +3.0 and +17.0 indicates protanopia, deuteranopia angles range between -4.0 and -11.0, while tritans have an angle more negative than -70. Colblindor expands the ranges: a protan defect begins at +0.7, from +0.7 to -65 is a deutan defect, and less than -65 is a tritan defect.

- Major and minor radius

Vingrys and King-Smith use the moments of inertia method to determine color difference vectors. The major and minor moments of inertia are converted to major and minor radii to preserve the same unit of measure as used for the angles. These values can be used to determine the severity of the defect.

- Total Error Score (TES)

In math terms, TES is the square root of the sum of the squares of the major and minor radii. Scores have an approximate range of 11 to 40+, with higher values indicating greater severity.

- Total Color Difference Score (TCDS)

The book "Borish's Clinical Refraction" by William J. Benjamin provides the color difference tables used by K.J. Bowman (A method for quantitative scoring of the Farnsworth panel D-15. Acta Ophthalmologica. 1982, 60:907-915). However, these tables are based on color differences for the Munsell color codes. RGB color differences do not reflect human perception. Consequently, I use CIE L*a*b* for calculating color differences. Since quantitative scoring is based on normal color values, the sRGB TCDS is also based on normal sRGB values, and not the simulated values.

- Selectivity Index (S-Index)

The S-Index is the ratio of the major radius to the minor radius. Values less than 1.8 indicate normal vision, or random values.

- Vingrys' Confusion Index (C-Index (V))

Vingrys' C-Index is the major radius of the subject divided by the major radius for normal vision. The dividing line between normal and defective is 1.60.

- Bowman's Confusion Index (C-Index (B))

Bowman's C-Index is the TCDS of the subject divided by the normal TCDS. The color differences are based on CIE L*a*b* color distances, not the Bowman color distance table.
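The derived scores in this guide reduce to a few lines of arithmetic. The following Java sketch is illustrative only: the class `PanelScoreSketch` and its method names are hypothetical, TES is assumed to be the square root of the sum of the squared radii, the angle ranges are treated as inclusive, and the tritan range is read as angles more negative than -70. The normal major radius and normal TCDS are passed in as parameters rather than hard-coded.

```java
// Hypothetical helper for the derived scores described in the guide above.
final class PanelScoreSketch {

    // TES, assuming sqrt(major^2 + minor^2).
    static double totalErrorScore(double majorRadius, double minorRadius) {
        return Math.hypot(majorRadius, minorRadius);
    }

    // S-Index: major radius divided by minor radius.
    static double selectivityIndex(double majorRadius, double minorRadius) {
        return majorRadius / minorRadius;
    }

    // Vingrys' C-Index: subject's major radius over the normal major radius.
    static double vingrysConfusionIndex(double majorRadius, double normalMajorRadius) {
        return majorRadius / normalMajorRadius;
    }

    // Bowman's C-Index: subject's TCDS over the normal TCDS.
    static double bowmanConfusionIndex(double tcds, double normalTcds) {
        return tcds / normalTcds;
    }

    // Classifies a confusion angle (degrees) with the Vingrys and King-Smith
    // ranges quoted above. Assumption: tritan means more negative than -70.
    static String classifyAngle(double angle) {
        if (angle >= 3.0 && angle <= 17.0) {
            return "protan";
        }
        if (angle <= -4.0 && angle >= -11.0) {
            return "deutan";
        }
        if (angle < -70.0) {
            return "tritan";
        }
        return "unclassified";
    }
}
```

Angles that fall between the published ranges are reported as unclassified here; the wider Colblindor ranges could be substituted by changing the three comparisons.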

Test results

Tests were run for protanopia, deuteranopia, and tritanopia (100% CVD) for the following conditions:

- Each test was run with a gamma of 1.0 and 1.2 to determine whether gamma affected the test results. Since the system gamma is 1.0, these values reflect the adjustment to the system gamma. The effective display gammas are 2.0 and 2.2. Gamma adjustments greater than 1.5 tend to shift the colors such that there is a large increase in colors that are outside of the sRGB gamut. For compatibility with older terminals, the default gamma for sRGB is 2.2.

- Tests were run for both the Colblindor (D15A) and Color Blindness (D15B) RGB values for the Farnsworth D-15 Dichromatic panel.

- All four models were tested.
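For readers unfamiliar with gamma adjustment, the following Java sketch shows one common form of it on a single 8-bit channel. It is an assumption, not the app's code: the class `GammaSketch` is hypothetical, and the power-law direction (exponent 1 / gamma) may differ from what the app applies.

```java
// Hypothetical sketch of a power-law gamma adjustment on an 8-bit channel.
final class GammaSketch {

    // Assumes out = 255 * (in / 255)^(1 / gamma); the app may apply gamma differently.
    static int adjust(int channel, double gamma) {
        double normalized = channel / 255.0;
        double adjusted = Math.pow(normalized, 1.0 / gamma);
        long rounded = Math.round(adjusted * 255.0);
        // Clamp to the valid 8-bit range.
        return (int) Math.max(0L, Math.min(255L, rounded));
    }
}
```

Under this assumption a gamma of 1.0 leaves every channel unchanged, while a gamma of 1.2 brightens mid-tones and leaves 0 and 255 fixed.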

The results are presented according to the type of CVD. The graphical score sheets are presented first. The analytical test scores are shown next, followed by a discussion of the raw data.

Protanopia

The following score sheets are for a gamma of 1.0.

Figure 5 - D15A Gamma 1.0 Protanopia

Figure 6 - D15B Gamma 1.0 Protanopia

Figure 7 - D15A Gamma 1.2 Protanopia

Figure 8 - D15B Gamma 1.2 Protanopia

- MGWW

The MGWW model returns the same cap order for all tests. Although it has fewer lines parallel to the protan line, the cap order is similar to that shown for protanopia in Figure 4. All the test scores for MGWW indicate protanopia. With a few variations, the cap order matches the hue order.

- BVM

BVM results vary from protanopia to deuteranopia depending on the test conditions. The protanopia results are limited to D15B. For D15A, the results are for protanomaly or deuteranomaly. Figure 7 shows a very unusual confusion diagram in which caps 4 and 5 are reversed.

- MOF

MOF returns the same results as MGWW. However, the colors generated are radically different. Unlike MGWW, there is no apparent relationship between the hue and the cap order.

- ImageJ Plugin

With a gamma of 1.0, the ImageJ plugin matches MGWW for both D15A and D15B. With a gamma of 1.2, the results are slightly weaker, but still in the protanopia range.

Deuteranopia

Figure 12 - D15A Gamma 1.2 Deuteranopia

Figure 13 - D15B Gamma 1.2 Deuteranopia

The quantitative scores for deuteranopia are shown in Figure 14.

Figure 14 - Quantitative Scores for Deuteranopia

- MGWW

For MGWW, the D15A cap order is the same for a gamma of 1.0 or 1.2. Although slightly different from the D15A cap order, the D15B cap order is also not gamma dependent. For both D15A and D15B, the cap order is a reasonable variation of the cap order shown in Figure 4. The angles for D15A and D15B (see Figure 14) are both in the deuteranopia range.

- BVM

BVM is not sensitive to gamma, but it provides different results for D15A and D15B. Figure 10 shows a very unusual confusion diagram in which caps 4 and 5 are reversed.

- MOF

MOF mimics MGWW, but with different colors.

- ImageJ Plugin

The test results show that the ImageJ plugin is gamma sensitive for D15A, but not for D15B. The ImageJ plugin does produce different results for D15A and D15B. Although it is based on MGWW, the results are not the same.

Tritanopia

Figure 16 - D15B Gamma 1.0 Tritanopia

The gamma 1.2 score sheets are as follows:

Figure 17 - D15A Gamma 1.2 Tritanopia

Figure 18 - D15B Gamma 1.2 Tritanopia

The quantitative scores for tritanopia are shown in Figure 19.

Figure 19 - D15 Quantitative Scores for Tritanopia

- MGWW

For a gamma of 1.0, MGWW reports the same values for D15A and D15B. However, MGWW is gamma sensitive, with different values for a gamma of 1.2. While there are variations in the results, all tests reflect tritanopia.

- BVM

For tritanopia, BVM is not gamma sensitive. BVM reports the same results for both D15A and D15B. The results match those of MGWW with a gamma of 1.0, although the colors are different.

- MOF

As shown in Figures 15 through 18, MOF has an unusual pattern that lowers its score in Figure 19.

- ImageJ Plugin

For D15A, the ImageJ plugin is gamma sensitive. However, it is not gamma sensitive for D15B. For D15B with a gamma of 1.0, the results reflect tritanomaly and not tritanopia.

Analysis of test results

This test of simulation models was limited to four questions. The answers to these questions raise further questions that become the subject of future tests.

Do the RGB colors alter the test results?

As mentioned above, there are two sets of RGB colors used for the Web version of the D15 Panel: Colblindor (D15A) and Color Blindness (D15B). For deuteranopia, every model produced different results for D15A and D15B. For protanopia, only BVM produced different results. MGWW and the ImageJ plugin produced different results with tritanopia.

Again, the differences concern color confusion. For BVM and the ImageJ plugin, the differences in confusion changed the diagnosis.

Does gamma make a difference?

For all forms of CVD, the ImageJ plugin resulted in different confusion diagrams. For protanopia, BVM showed major changes between gamma 1.0 and 1.2, which actually altered the diagnosis. MGWW exhibited differences in confusion for tritanopia, but the results did not alter the diagnosis.

Which model returns the best results?

Using color distance as a metric for ordering colors made a tremendous difference in test results. The results are surprising.

Even though it uses pre-calculated tables, MOF (Machado-Oliveira-Fernandes) produced the most consistent results, with no significant failures. MOF is also the only model based on the stages theory of color vision and the theory that replacement cones have shifted frequencies.

BVM (Brettel-Vienot-Mollon) had a problem differentiating between protanopia and deuteranopia for the protanopia tests. Of the four protanopia test cases, BVM only returned the correct result for D15B with a gamma of 1.0.

MGWW never returned a bad result, but did create different results depending on RGB colors and gamma. Although based on MGWW, the ImageJ plugin tended to produce different results. These results were sensitive to both the gamma and the RGB color set.

Based on the current test results, I would rank the results from best to worst as MOF, MGWW, ImageJ plugin, BVM.

Do the simulation models mimic human response?

The article by Vingrys and King-Smith includes a table of human responses to the Farnsworth-Munsell D-15 panel. Following is a partial replica of that table:

Figure 20 - Vingrys & King-Smith Test Results

The normal values match those generated by the D15 test simulator (see Figures 9, 14 and 19). This verifies that the algorithms used are correct. If the algorithms are correct, why are simulated scores not similar to the human scores? This is especially true for protanopia and deuteranopia. Except for the ImageJ plugin, the tritanopia simulation scores are reasonably close to the human scores.

Perhaps the key to answering this question lies in the differences in color distances. The following table shows the Bowman color distances for the Munsell colors. The D15A and D15B color distances are based on the CIE L*a*b* color distances.

Figure 21 - Color distances from pilot cap
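A CIE L*a*b* color distance for an sRGB cap color can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used to build the table: the class `LabDistanceSketch` is hypothetical, the conversion uses the standard sRGB transfer curve with a D65 white point, and the distance is the simple CIE76 formula. The article does not say which Delta E formula the app uses.

```java
// Hypothetical sketch: sRGB to CIE L*a*b* (D65 white) and the CIE76 color difference.
final class LabDistanceSketch {

    // D65 reference white, with Y normalized to 1.0.
    private static final double XN = 0.95047;
    private static final double YN = 1.0;
    private static final double ZN = 1.08883;

    // Removes the sRGB transfer curve from one 8-bit channel.
    static double linearize(int channel) {
        double c = channel / 255.0;
        return (c <= 0.04045) ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
    }

    // Piecewise helper function from the L*a*b* definition.
    private static double f(double t) {
        final double epsilon = 216.0 / 24389.0;
        final double kappa = 24389.0 / 27.0;
        return (t > epsilon) ? Math.cbrt(t) : (kappa * t + 16.0) / 116.0;
    }

    // Converts 8-bit sRGB to {L*, a*, b*}.
    static double[] srgbToLab(int r, int g, int b) {
        double rl = linearize(r);
        double gl = linearize(g);
        double bl = linearize(b);
        // Linear sRGB to CIE XYZ (D65).
        double x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl;
        double y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl;
        double z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl;
        double fx = f(x / XN);
        double fy = f(y / YN);
        double fz = f(z / ZN);
        return new double[] { 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz) };
    }

    // CIE76 color difference: Euclidean distance in L*a*b*.
    static double deltaE(double[] p, double[] q) {
        double dL = p[0] - q[0];
        double da = p[1] - q[1];
        double db = p[2] - q[2];
        return Math.sqrt(dL * dL + da * da + db * db);
    }
}
```

A distance from the pilot cap is then `deltaE(srgbToLab(...), srgbToLab(...))` for the pilot and the cap in question. Comparing such values against Munsell-based distances also requires care with the illuminant, since Munsell data is usually given for Illuminant C.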

Conclusions

These tests highlighted several issues:

- The different results between D15A and D15B raise questions about the RGB colors used in digital testing on the Web and mobile devices. There is a possibility that the Munsell colors used in the Farnsworth D15 test are outside the sRGB gamut of colors. If this is the case, the solution is a color corrected device with color management software. This would allow the use of other color spaces, such as Adobe RGB.

- Color correction will not solve the color distance problem. For the Web based tests to match the physical test, the RGB color distances must closely match the Munsell color distances.

- No model produced results that matched those in Figures 4 or 18. Whether this reflects a problem that is inherent in the models is difficult to determine. Resolution of the first two issues should help answer this question.

The results produced by these tests are educational, in that they:

- Emphasize that each person has a color wheel. Both the FM 100 and D15 tests are color wheels.

- Show that CVD is not just a rotation of the color wheel; color confusion changes the order of the colors.

- Show that simulation of CVD is about color confusion. We do not know the colors seen by any person.

The following images are for each of the models tested with a gamma of 1.0. Each image is a concatenation of the normal, protan, deutan, and tritan images.

What do these images tell us about the validity of each model?

Figure 22 - MOF

Figure 23 - MGWW

Figure 24 - ImageJ plugin

Figure 25 - BVM

Nothing!

Images emphasize color, which is an illusion.

Images hide the transitions that cause confusion.

Images are useless when selecting text colors.

Addendum

Additional research affects the analysis of the results of the above tests. After months of searching for a list of the Munsell color codes for the Farnsworth D15 Dichromatic Panel test, I found the list in a 1947 edition of the manual, in Japanese. With the help of EasyRGB, I was able to construct the following color distance table:

Figure 26 - Munsell Delta E Table

From Figure 26, we can see that the color distances for D15A reflect a significant departure from the Munsell colors. D15B is much closer to the actual Munsell colors. However, D15A and D15B have a bigger problem.

The Munsell color codes are Hue Value (luminosity) / Chroma (roughly equivalent to saturation). From Figure 26, we see that the hue changes, while the luminosity and chroma remain constant (except for the chroma of the Pilot cap). When converted to CIE XYZ, luminosity is represented by the 'Y'. For CIE L*a*b*, the 'L' is the luminosity. These CIE color spaces do not have a specific field for chroma.
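Although CIE L*a*b* has no chroma field, a chroma and hue angle can be derived from the a* and b* components (the cylindrical LCh form of the same space). The Java sketch below shows that derivation; the class `LabChromaSketch` and its method names are hypothetical and are not part of the worksheets in this article.

```java
// Hypothetical sketch: derive chroma and hue angle from CIE L*a*b* components.
final class LabChromaSketch {

    // Chroma C*ab: distance of (a*, b*) from the neutral axis.
    static double chroma(double a, double b) {
        return Math.hypot(a, b);
    }

    // Hue angle h_ab in degrees, normalized to the range [0, 360).
    static double hueDegrees(double a, double b) {
        double h = Math.toDegrees(Math.atan2(b, a));
        return (h < 0.0) ? h + 360.0 : h;
    }
}
```

Caps that hold Munsell chroma constant should show roughly similar derived chroma values, which gives one way to check an RGB cap set against the original design.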

Figure 27 shows the worksheet for D15A, while Figure 28 shows the worksheet for D15B. Note that neither D15A nor D15B maintains a constant luminosity for all caps. Furthermore, the nearest Munsell color for the cap RGB value does not match the Munsell colors in Figure 26. Except for cap 11, D15B colors correlate to the same Munsell colors as in Figure 26. The Delta E column is the color distance of the RGB value from the color in the Munsell column.

Figure 27 - D15A Munsell Worksheet

Figure 28 - D15B Munsell Worksheet

For comparison, Figure 29 shows the worksheet for EasyRGB color conversion from Munsell to sRGB. I have other Munsell conversion worksheets, but chose this one to avoid explaining the conversion from Illuminant C to D65. Munsell colors are usually expressed using Illuminant C (average daylight), while sRGB normally uses D65 (high noon).

Figure 29 - EasyRGB Munsell Worksheet

Yes, the EasyRGB values do make a difference to the Farnsworth D15 test results for the colorblind simulators. While there is much work yet to be done, the goal of being able to test different models for colorblind simulation is getting closer to completion.